Don't let the following introduction confuse you.

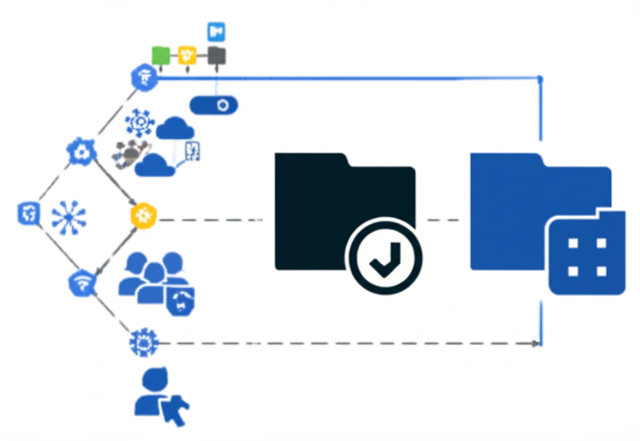

This post *is* about app-management enabled folders.

But before explaining what they are and how you can make one, it is important to quickly refresh what the term “folder” means in the context of Google Cloud.

If you have used Google Cloud, you know about Google Cloud projects.

According to the Google Cloud resource hierarchy, any service resource (e.g. a virtual machine, GKE cluster or IP address) has a project as its parent. The project is the first grouping mechanism in that hierarchy.

When a user accesses Google Cloud using an organizational account (a Google Workspace account issued by an organization’s administrator), they have access to additional levels of grouping: folders and, at the very top, the organization.

Of course, all access is subject to appropriate IAM permissions. Folders let you group projects and other folders to mirror a company’s organizational or production hierarchy and to control access to the underlying resources.

Users can also access Google Cloud using personal accounts, which are free accounts for individuals, created to access Google services like Gmail, Drive, and more.

However, these accounts limit access to the resource hierarchy to projects and their underlying service resources.
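
To make the hierarchy more concrete, here is a minimal Python sketch that models it as a simple tree. It is a toy model for illustration only, not a Google Cloud API: the `Node` class, its `kind` labels and all the names (`example.com`, `engineering`, `orders-prod`, `my-sandbox`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """One node in a toy resource hierarchy (not a Google Cloud API type)."""
    name: str
    kind: str  # "organization", "folder", "project" or "resource"
    parent: Optional["Node"] = None

    def ancestors(self) -> List["Node"]:
        """Return the chain from this node's parent up to the root."""
        chain = []
        node = self.parent
        while node is not None:
            chain.append(node)
            node = node.parent
        return chain

    def path(self) -> str:
        """Render the full path from the root down to this node."""
        nodes = list(reversed(self.ancestors())) + [self]
        return " / ".join(f"{n.kind}:{n.name}" for n in nodes)


# Organizational account: organization -> folders -> project -> resource.
org = Node("example.com", "organization")
engineering = Node("engineering", "folder", parent=org)
backend = Node("backend", "folder", parent=engineering)  # folders can nest
project = Node("orders-prod", "project", parent=backend)
vm = Node("orders-vm-1", "resource", parent=project)

# Personal account: the hierarchy starts at the project level.
sandbox = Node("my-sandbox", "project")
sandbox_vm = Node("test-vm", "resource", parent=sandbox)

print(vm.path())
print(sandbox_vm.path())
```

The first path walks through the organization and two nested folders before it reaches the project. The second one starts at the project, which is all a personal account gets to see.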