ADK’s Built-in Observability

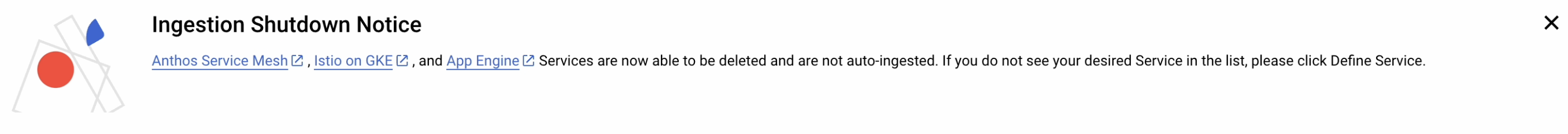

Have you ever found yourself grappling with observability instrumentation, even with tools like OpenTelemetry? The challenge becomes even more pronounced when developing agentic applications, where understanding internal workflows is paramount. Many frameworks help developers build agentic applications. I’d like to review the observability data that is generated when developers use the Agent Development Kit (ADK) from Google. The ADK framework comes with logging and tracing instrumentation out of the box.